Dave,

I can help with the real-time ingest from AWS Kinesis, and my colleague will follow up regarding the secondary indices. Secondary indexing, along with collecting appropriate stats to inform query planning, is actively being improved for our next release, and he has the best insight into the latest development.

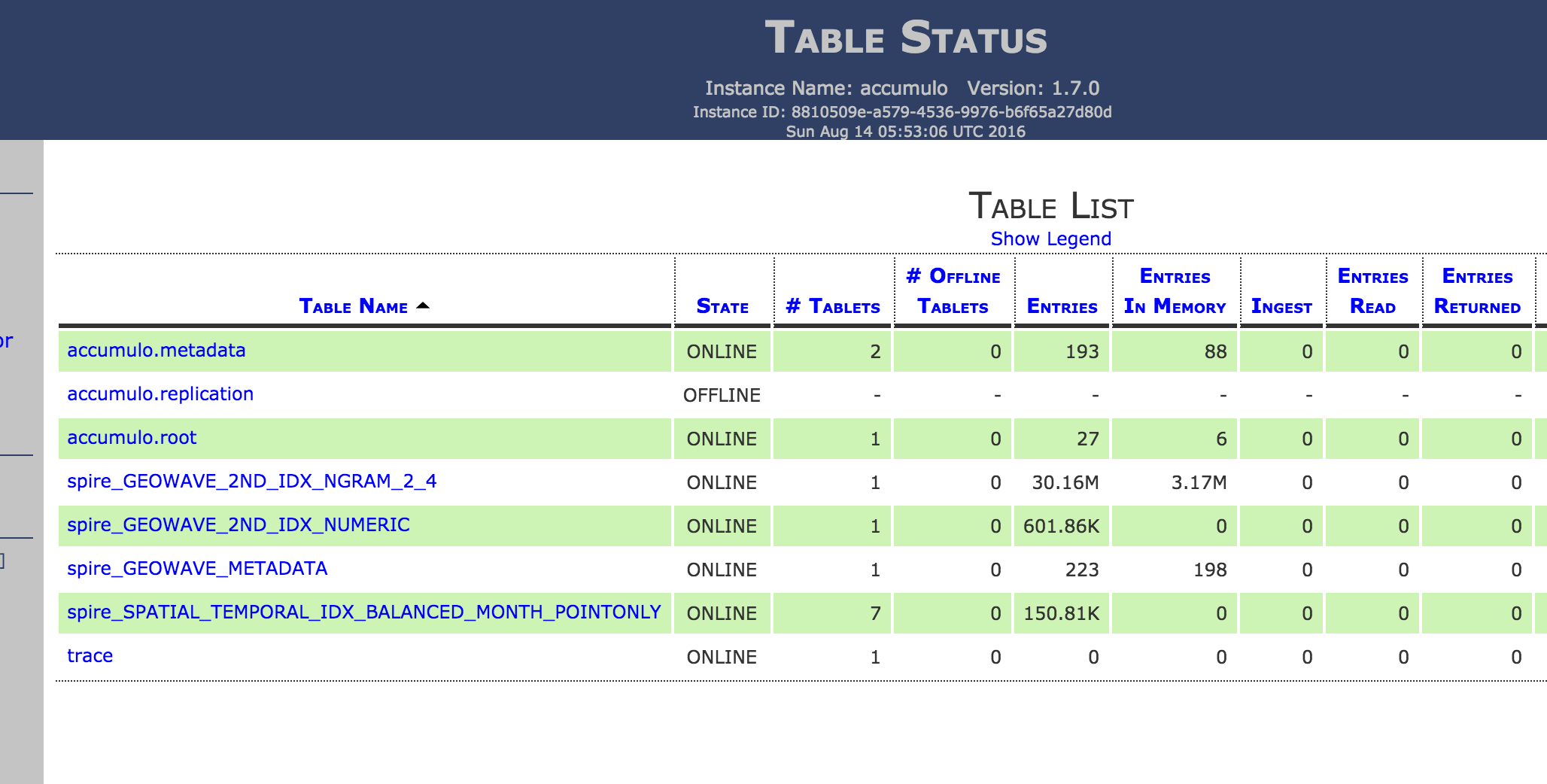

Generally, Accumulo should be a good fit for your use case of real-time ingest while querying. The times to worry about query performance degradation are when the tables are actively running a minor or major compaction. If that happens to be occurring after you kill the Spark ingest, it could explain what you are seeing, but it seems awfully coincidental. You can force compaction (programmatically or through the Accumulo shell) at any time if there are low-usage time windows. Looking at the current code for a text-based attribute secondary index, I can see that when the NGram secondary index is used, a HyperLogLog statistic is added to the metadata table (the statistic is used for query planning). It may help to force compaction on the *_GEOWAVE_METADATA table periodically or prior to testing the query. Programmatically, this can be done as follows after constructing an Accumulo Connector:

connector.tableOperations().compact("spire_GEOWAVE_METADATA", null, null, true, true);

As some background, when stats are written to GeoWave's metadata table, an Accumulo combiner is used to merge them together, so every call to close the writer flushes the locally accumulated statistics as new entries in the table. Typical/default statistics are very inexpensive to merge, but it's not clear to me how expensive merging HyperLogLog stats can be. When compaction is run, the table is rewritten with the merged results, so you should see the METADATA table grow in number of entries and then shrink after compaction.
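To make the grow-then-shrink behavior concrete, here is a toy Java sketch. It is not Accumulo or GeoWave code: the class CombinerSketch and its methods (flush, read, entryCount, compact) are hypothetical names of my own, and a simple summed count stands in for a real statistic. It only illustrates the bookkeeping and says nothing about how expensive a HyperLogLog merge is.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Toy model of a table with a summing combiner (hypothetical, for illustration only).
public class CombinerSketch {
    // Each statistic id maps to every un-merged value written so far.
    private final Map<String, List<Long>> entries = new TreeMap<>();

    // Closing a writer appends a new entry; nothing is updated in place.
    public void flush(String statId, long localCount) {
        entries.computeIfAbsent(statId, k -> new ArrayList<>()).add(localCount);
    }

    // A read merges all entries for the id on the fly, as a combiner would at scan time.
    public long read(String statId) {
        long total = 0;
        for (long v : entries.getOrDefault(statId, Collections.<Long>emptyList())) {
            total += v;
        }
        return total;
    }

    // Number of physical entries currently stored across all ids.
    public int entryCount() {
        int n = 0;
        for (List<Long> values : entries.values()) {
            n += values.size();
        }
        return n;
    }

    // Compaction rewrites the table with a single merged entry per id.
    public void compact() {
        for (Map.Entry<String, List<Long>> e : entries.entrySet()) {
            long merged = 0;
            for (long v : e.getValue()) {
                merged += v;
            }
            List<Long> single = new ArrayList<>();
            single.add(merged);
            e.setValue(single);
        }
    }

    public static void main(String[] args) {
        CombinerSketch table = new CombinerSketch();
        table.flush("count", 3); // first writer closed
        table.flush("count", 4); // second writer closed
        table.flush("count", 5); // third writer closed
        System.out.println("entries before compaction: " + table.entryCount());
        table.compact();
        System.out.println("entries after compaction: " + table.entryCount());
        System.out.println("merged value: " + table.read("count"));
    }
}
```

The value a query sees is the same before and after compaction; only the number of stored entries that have to be merged on each read changes.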

Hopefully that helps.