Hi Team,

I recently switched from Vanilla Postgres to Postgres + TimescaleDB (V2.15) using Volttron’s

SQLHistorian Agent for my 5 second resolution data. I have queries in Grafana that aggregate this 5 second data to various resolutions (1m, 5m, or 15m) depending on how long of a period you are viewing. These aggregations are pretty slow, so I would like

to move to the next step of using TimescaleDB’s continuous aggregation feature to improve performance when querying this aggregated data. I know Andrew Rogers has success using this, so im hoping for a little direction on how to proceed.

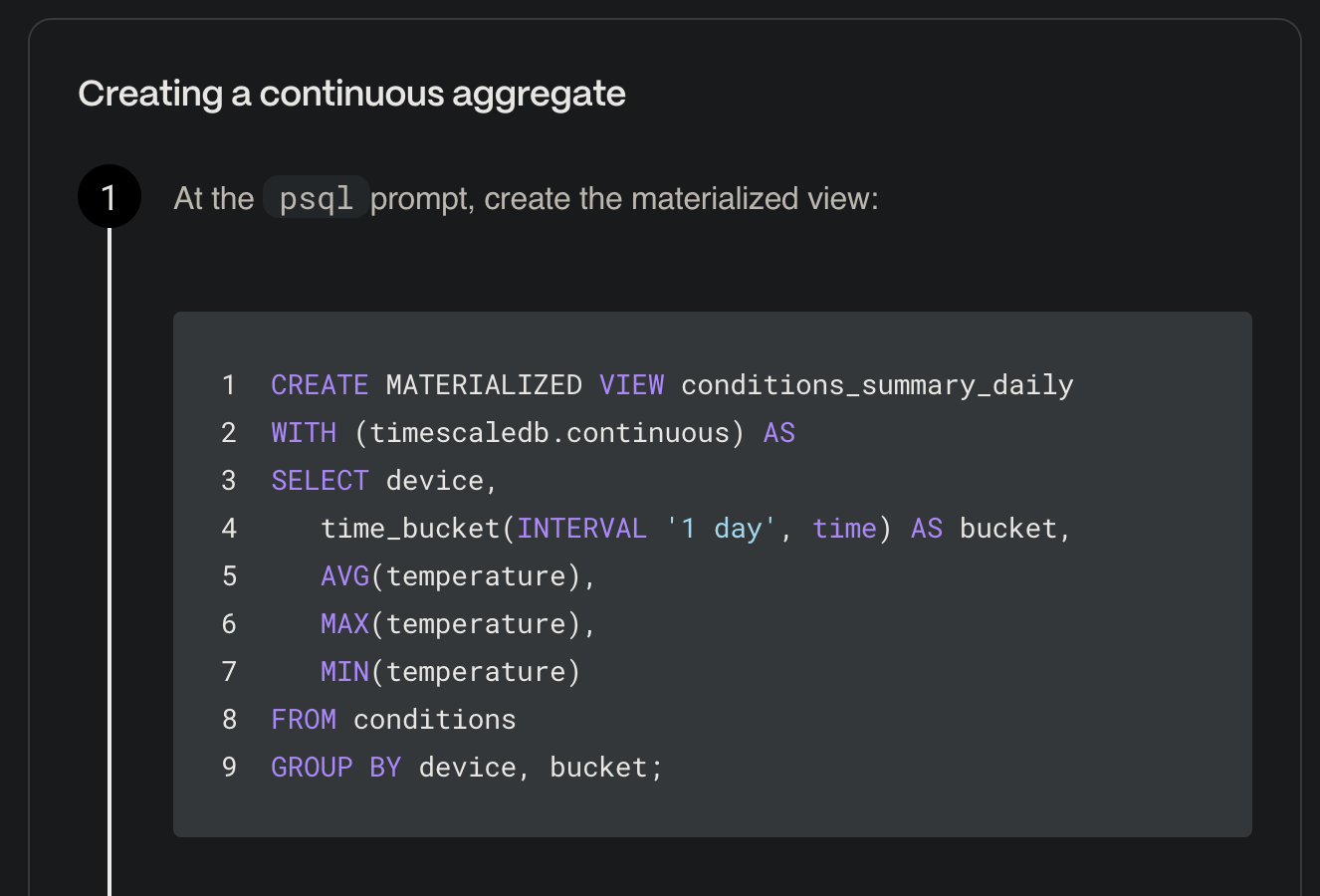

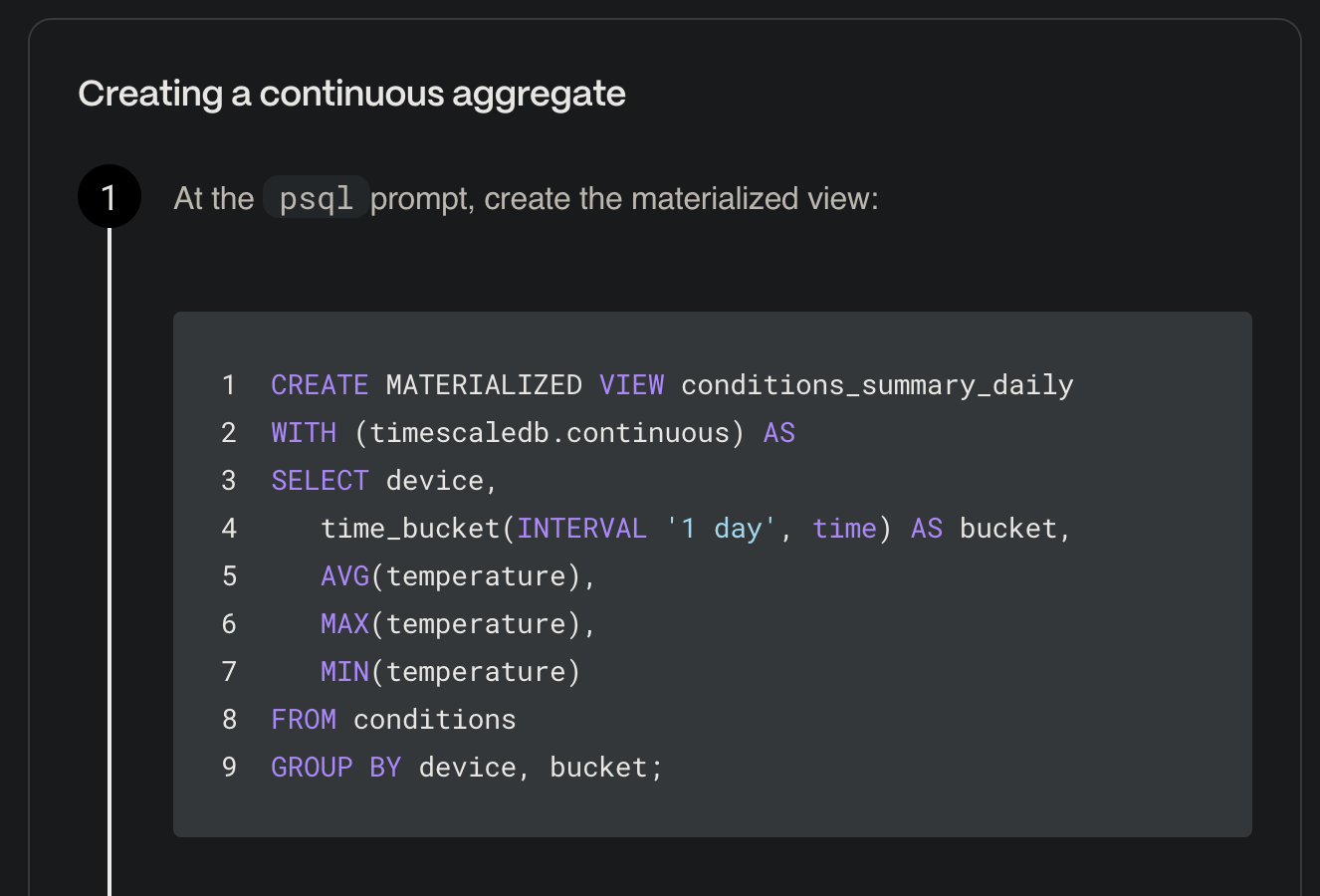

Looking at the Timescale documentation (below) it seems like it should be pretty straight forward

to create one of these continuous aggregations but, given the default structure of the “data” hypertable created by Volttron in my database, I am hung up on how to structure the query to create the continuous aggregation.

For example, my hypertable has the structure of the default Volttron Postgres schema (e.g. ts, topic_id, value_string):

enervenue_stac_bison=# SELECT * FROM timescaledb_information.hypertables;

hypertable_schema | hypertable_name | owner | num_dimensions | num_chunks | compression_enabled | tablespaces

------------------- +----------------- +---------- +---------------- +------------ +--------------------- +-------------

public | data | ener_volt. | 1 | 13 | f |

enervenue_stac_bison=# SELECT * FROM data ORDER BY ts DESC LIMIT 200;

ts | topic_id | value_string

---------------------------- +----------+--------------

2024-09-19 21:30:40.543855 | 46 | 64.6

2024-09-19 21:30:40.543855 | 47 | 64.8

2024-09-19 21:30:40.543855 | 48 | 64.7

2024-09-19 21:30:40.543855 | 49 | 503.3

2024-09-19 21:30:40.543855 | 50 | 504.0

2024-09-19 21:30:40.543855 | 51 | 503.8

2024-09-19 21:30:40.543855 | 52 | 290.7

2024-09-19 21:30:40.543855 | 53 | 290.7

2024-09-19 21:30:40.543855 | 54 | 291.1

2024-09-19 21:30:40.543855 | 55 | 56200

2024-09-19 21:30:40.543855 | 56 | 60.0

2024-09-19 21:30:40.543855 | 57 | 56200

2024-09-19 21:30:40.543855 | 58 | -2200

2024-09-19 21:30:40.543855 | 59 | 1.0

2024-09-19 21:30:40.543855 | 60 | 174935245400

2024-09-19 21:30:40.543855 | 61 | 76.6

2024-09-19 21:30:40.543855 | 62 | 744.3

2024-09-19 21:30:40.543855 | 63 | 57000

2024-09-19 21:30:40.543855 | 64 | 58.92

2024-09-19 21:30:40.543855 | 65 | 64.79

2024-09-19 21:30:40.543855 | 66 | 9

2024-09-19 21:30:40.543855 | 67 | 11

2024-09-19 21:30:40.543855 | 68 | 786432

2024-09-19 21:30:40.543855 | 69 | 4294967295

2024-09-19 21:30:40.543855 | 70 | 0

2024-09-19 21:30:40.543855 | 71 | 0

2024-09-19 21:30:40.543855 | 72 | 0

2024-09-19 21:30:40.543855 | 73 | 0

2024-09-19 21:30:40.543855 | 74 | 124700

2024-09-19 21:30:40.543855 | 75 | 995.0

2024-09-19 21:30:40.543855 | 76 | 700.0

2024-09-19 21:30:40.543855 | 77 | 124700

2024-09-19 21:30:40.543855 | 78 | 124700

2024-09-19 21:30:40.543855 | 79 | 0

2024-09-19 21:30:40.543855 | 80 | 449

2024-09-19 21:30:40.543855 | 81 | 1.0

2024-09-19 21:30:40.543855 | 82 | 0

2024-09-19 21:30:40.543855 | 83 | 0

2024-09-19 21:30:40.543855 | 84 | 124700

2024-09-19 21:30:40.543855 | 85 | 100

2024-09-19 21:30:40.543855 | 86 | 117

2024-09-19 21:30:40.543855 | 87 | 0

2024-09-19 21:30:40.543855 | 88 | 0

2024-09-19 21:30:40.543855 | 89 | 0

2024-09-19 21:30:40.543855 | 90 | 175.0

2024-09-19 21:30:40.543855 | 91 | 175.0

2024-09-19 21:30:40.543855 | 92 | 3

2024-09-19 21:30:40.543855 | 93 | 950.0

2024-09-19 21:30:40.543855 | 94 | 16423

2024-09-19 21:30:40.218954 | 141 | 124.4

2024-09-19 21:30:40.218954 | 142 | 124.7

2024-09-19 21:30:40.218954 | 143 | 9.1

2024-09-19 21:30:40.218954 | 144 | 86.1

2024-09-19 21:30:40.218954 | 145 | 215.6

2024-09-19 21:30:40.218954 | 146 | 123.5

2024-09-19 21:30:40.218954 | 147 | 117.6

2024-09-19 21:30:40.218954 | 148 | 15.2

2024-09-19 21:30:40.218954 | 149 | 5.3

2024-09-19 21:30:40.218954 | 150 | 4.9

2024-09-19 21:30:40.218954 | 151 | 0

2024-09-19 21:30:40.218954 | 152 | 3.4

2024-09-19 21:30:40.218954 | 153 | 89.5

2024-09-19 21:30:40.218954 | 154 | 100.0

2024-09-19 21:30:40.218954 | 155 | 60.0

2024-09-19 21:30:40.218954 | 156 | -0.6

2024-09-19 21:30:40.218954 | 157 | 0

2024-09-19 21:30:40.218954 | 158 | 0

2024-09-19 21:30:40.218954 | 159 | -0.1

2024-09-19 21:30:40.218954 | 160 | -0.3

2024-09-19 21:30:40.218954 | 161 | -0.6

2024-09-19 21:30:40.218954 | 162 | 0

2024-09-19 21:30:40.218954 | 163 | -0.1

2024-09-19 21:30:40.218954 | 164 | 0.7

2024-09-19 21:30:40.218954 | 165 | 0.6

2024-09-19 21:30:40.218954 | 166 | 0

2024-09-19 21:30:40.218954 | 167 | 1.1

2024-09-19 21:30:40.218954 | 168 | -86.3

2024-09-19 21:30:40.218954 | 169 | 6.0

2024-09-19 21:30:40.218954 | 170 | 100.0

It’s not clear to me how I need to adjust the continuous aggregation creation query from the documentation to create the continuous aggregation from my hypertable.

Can someone please share their experience with setting this up? To put all my questions in one place:

- Is it possible to apply continuous aggregations directly to this Volttron created “data” table or do I need to have a more custom structure to this data to leverage continuous aggregations?

- Technically, aren’t all the “value_string” values strings? Does this cause trouble with the continuous aggregations?

- I would really like to create these continuous aggregations across all 186 topics in my database, not just for select columns like the documentation example seems to do. Is this

possible?

Thank you!

Joe Thompson

Technical Leader

Electric Power Research Institute

Energy Storage and Distributed Generation

(912) 663-3407

*** This email message is for the sole use of the intended recipient(s) and may contain information that is confidential, privileged or exempt from disclosure under applicable law. Unless otherwise expressed in this message by the sender or except as may be

allowed by separate written agreement between EPRI and recipient or recipient’s employer, any review, use, distribution or disclosure by others of this message is prohibited and this message is not intended to be an electronic signature, instrument or anything

that may form a legally binding agreement with EPRI. If you are not the intended recipient, please contact the sender by reply email and permanently delete all copies of this message. Please be advised that the message and its contents may be disclosed, accessed

and reviewed by the sender's email system administrator and/or provider. ***