Hi Emilio,

I'm trying to create an HBase importer that can import Polygons stored as WKT in CSV files. Everything builds fine, but when I try to import a file I see:

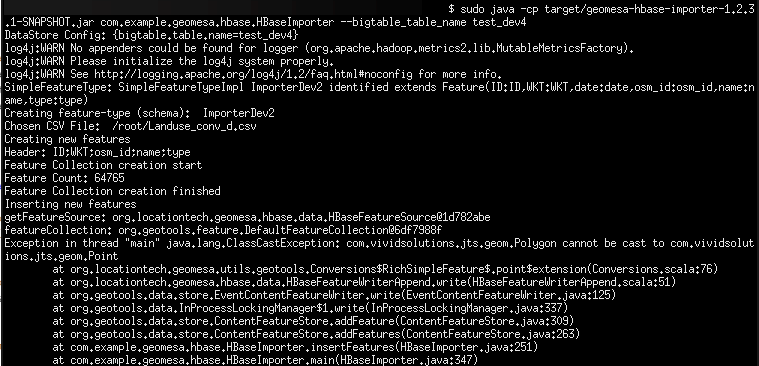

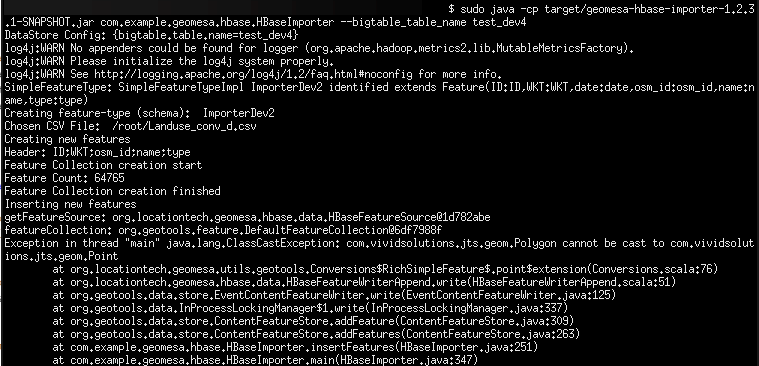

The exception looks as if my geometry were being treated as a Point:

Exception in thread "main" java.lang.ClassCastException: com.vividsolutions.jts.geom.Polygon cannot be cast to com.vividsolutions.jts.geom.Point

I can't find a solution to this problem.

Application code:

```java
/**
 * Copyright 2016 Commonwealth Computer Research, Inc.
 *
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

package com.example.geomesa.hbase;

import com.beust.jcommander.internal.Lists;
import com.google.common.base.Joiner;
import com.vividsolutions.jts.geom.GeometryFactory;
import com.vividsolutions.jts.geom.Polygon;
import com.vividsolutions.jts.io.ParseException;
import com.vividsolutions.jts.io.WKTReader;
import org.apache.commons.cli.*;
import org.geotools.data.*;
import org.geotools.feature.DefaultFeatureCollection;
import org.geotools.feature.FeatureCollection;
import org.geotools.feature.SchemaException;
import org.geotools.feature.simple.SimpleFeatureBuilder;
import org.geotools.geometry.jts.JTSFactoryFinder;
import org.geotools.swing.data.JFileDataStoreChooser;
import org.opengis.feature.simple.SimpleFeature;
import org.opengis.feature.simple.SimpleFeatureType;

import java.io.BufferedReader;
import java.io.File;
import java.io.FileReader;
import java.io.IOException;
import java.io.Serializable;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class HBaseImporter {

    static String TABLE_NAME = "bigtable.table.name".replace(".", "_");

    // sub-set of parameters that are used to create the HBase DataStore
    static String[] HBASE_CONNECTION_PARAMS = new String[]{
        TABLE_NAME
    };

    /**
     * Creates a common set of command-line options for the parser. Each option
     * is described separately.
     */
    static Options getCommonRequiredOptions() {
        Options options = new Options();
        Option tableNameOpt = OptionBuilder.withArgName(TABLE_NAME)
            .hasArg()
            .isRequired()
            .withDescription("table name")
            .create(TABLE_NAME);
        options.addOption(tableNameOpt);
        return options;
    }

    static Map<String, Serializable> getHBaseDataStoreConf(CommandLine cmd) {
        Map<String, Serializable> dsConf = new HashMap<>();
        for (String param : HBASE_CONNECTION_PARAMS) {
            dsConf.put(param.replace("_", "."), cmd.getOptionValue(param));
        }
        System.out.println("DataStore Config: " + dsConf);
        return dsConf;
    }

    static SimpleFeatureType createSimpleFeatureType(String simpleFeatureTypeName)
            throws SchemaException {
        // list the attributes that constitute the feature type
        List<String> attributes = Lists.newArrayList(
            "ID:Integer",
            "*WKT:Polygon:srid=4326", // the "*" denotes the default geometry (used for indexing)
            //"What:java.lang.Long",  // some types require full qualification (see DataUtilities docs)
            "date:Date",              // a date-time field is optional, but can be indexed
            "osm_id:String",
            "name:String",
            "type:String"
        );

        // create the bare simple-feature type
        String simpleFeatureTypeSchema = Joiner.on(",").join(attributes);
        SimpleFeatureType simpleFeatureType =
            DataUtilities.createType(simpleFeatureTypeName, simpleFeatureTypeSchema);
        System.out.println("SimpleFeatureType: " + simpleFeatureType);
        return simpleFeatureType;
    }

    static FeatureCollection createNewFeatures(SimpleFeatureType simpleFeatureType, File file)
            throws IOException {
        /*
         * We create a FeatureCollection into which we will put each Feature
         * created from a record in the input CSV data file.
         */
        DefaultFeatureCollection featureCollection = new DefaultFeatureCollection();

        /*
         * The GeometryFactory is used by the WKTReader to create the geometry
         * attribute of each feature (here a Polygon parsed from WKT).
         */
        GeometryFactory geometryFactory = JTSFactoryFinder.getGeometryFactory(null);
        SimpleFeatureBuilder featureBuilder = new SimpleFeatureBuilder(simpleFeatureType);
        WKTReader georeader = new WKTReader(geometryFactory);

        // try-with-resources closes the reader; the method returns after the block
        try (BufferedReader reader = new BufferedReader(new FileReader(file))) {
            /* First line of the data file is the header */
            String line = reader.readLine();
            System.out.println("Header: " + line);
            System.out.println("Feature Collection creation start");

            int lines = 0;
            for (line = reader.readLine(); line != null; line = reader.readLine()) {
                if (line.trim().length() > 0) { // skip blank lines
                    // fields are separated by ';', so the commas inside the WKT stay intact
                    String[] tokens = line.split(";");
                    int id = Integer.parseInt(tokens[0].trim());
                    Polygon wkt;
                    try {
                        wkt = (Polygon) georeader.read(tokens[1]);
                    } catch (ParseException e) {
                        // WKTReader.read throws a checked ParseException on malformed WKT
                        throw new IOException("Invalid WKT: " + tokens[1], e);
                    }
                    String osmId = tokens[2].trim();
                    String name = tokens[3].trim();
                    String type = tokens[4].trim();

                    // Set attributes by name: add() fills them strictly in schema order,
                    // and the schema's "date" attribute has no column in the CSV, so
                    // positional adds would shift osm_id/name/type by one slot.
                    // "date" is left unset (null).
                    featureBuilder.set("ID", id);
                    featureBuilder.set("WKT", wkt);
                    featureBuilder.set("osm_id", osmId);
                    featureBuilder.set("name", name);
                    featureBuilder.set("type", type);
                    SimpleFeature feature = featureBuilder.buildFeature(null);
                    featureCollection.add(feature);
                    lines++;
                }
            }
            System.out.println("Feature Count: " + lines);
        }
        System.out.println("Feature Collection creation finished");
        return featureCollection;
    }

    static void insertFeatures(String simpleFeatureTypeName,
                               DataStore dataStore,
                               FeatureCollection featureCollection)
            throws IOException {
        FeatureStore featureStore =
            (FeatureStore) dataStore.getFeatureSource(simpleFeatureTypeName);
        System.out.println("getFeatureSource: " + featureStore);
        System.out.println("featureCollection: " + featureCollection);
        featureStore.addFeatures(featureCollection);
        System.out.println("Insert finished");
    }

    public static void main(String[] args) throws Exception {
        // find out where -- in HBase -- the user wants to store data
        CommandLineParser parser = new BasicParser();
        Options options = getCommonRequiredOptions();
        CommandLine cmd = parser.parse(options, args);

        // verify that we can see this HBase destination in a GeoTools manner
        Map<String, Serializable> dsConf = getHBaseDataStoreConf(cmd);
        DataStore dataStore = DataStoreFinder.getDataStore(dsConf);
        // 'assert' is skipped unless the JVM runs with -ea, so check explicitly
        if (dataStore == null) {
            throw new IllegalStateException("Could not create a DataStore from " + dsConf);
        }

        // establish specifics concerning the SimpleFeatureType to store
        String simpleFeatureTypeName = "ImporterDev2";
        SimpleFeatureType simpleFeatureType = createSimpleFeatureType(simpleFeatureTypeName);

        // write Feature-specific metadata to the destination table in HBase
        // (first creating the table if it does not already exist); you only need
        // to create the FeatureType schema the *first* time you write any Features
        // of this type to the table
        System.out.println("Creating feature-type (schema): " + simpleFeatureTypeName);
        dataStore.createSchema(simpleFeatureType);

        File file = JFileDataStoreChooser.showOpenFile("csv", null);
        if (file == null) {
            return; // the file dialog was cancelled
        }
        System.out.println("Chosen CSV File: " + file);

        // create new features locally, and add them to this table
        System.out.println("Creating new features");
        FeatureCollection featureCollection = createNewFeatures(simpleFeatureType, file);
        System.out.println("Inserting new features");
        insertFeatures(simpleFeatureTypeName, dataStore, featureCollection);
    }
}
```
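A note on the attribute order in the importer: the schema declares six attributes (`ID`, `WKT`, `date`, `osm_id`, `name`, `type`), while each CSV row supplies five fields, and GeoTools' `SimpleFeatureBuilder.add` assigns values strictly in schema order, so skipping `date` shifts every later value by one slot. The plain-Java sketch below simulates that, contrasting positional filling with filling by attribute name (as `SimpleFeatureBuilder.set(String, Object)` does). It uses no GeoTools; the class `AttributeOrderSketch` and the sample row are hypothetical, and it is not claimed to explain the `ClassCastException`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class AttributeOrderSketch {

    // Extracts attribute names from "name:Type[:options]" specs, dropping the
    // leading '*' that marks the default geometry.
    static List<String> attributeNames(List<String> specs) {
        List<String> names = new ArrayList<>();
        for (String spec : specs) {
            String name = spec.split(":")[0];
            names.add(name.startsWith("*") ? name.substring(1) : name);
        }
        return names;
    }

    // Positional filling: the i-th value goes to the i-th attribute;
    // attributes left over at the end stay null.
    static Map<String, String> fillPositionally(List<String> names, List<String> values) {
        Map<String, String> row = new LinkedHashMap<>();
        for (int i = 0; i < names.size(); i++) {
            row.put(names.get(i), i < values.size() ? values.get(i) : null);
        }
        return row;
    }

    // Name-based filling: each attribute takes the value stored under its name,
    // or null if there is none (here, "date").
    static Map<String, String> fillByName(List<String> names, Map<String, String> values) {
        Map<String, String> row = new LinkedHashMap<>();
        for (String name : names) {
            row.put(name, values.get(name));
        }
        return row;
    }

    public static void main(String[] args) {
        List<String> names = attributeNames(Arrays.asList(
            "ID:Integer", "*WKT:Polygon:srid=4326", "date:Date",
            "osm_id:String", "name:String", "type:String"));

        // Hypothetical CSV row: five ';'-separated fields, no date column.
        String[] tokens = "1;POLYGON ((0 0, 1 0, 1 1, 0 0));42;Park;leisure".split(";");

        // prints {ID=1, WKT=POLYGON ((0 0, 1 0, 1 1, 0 0)), date=42, osm_id=Park, name=leisure, type=null}
        System.out.println(fillPositionally(names, Arrays.asList(tokens)));

        Map<String, String> byName = new LinkedHashMap<>();
        byName.put("ID", tokens[0]);
        byName.put("WKT", tokens[1]);
        byName.put("osm_id", tokens[2]);
        byName.put("name", tokens[3]);
        byName.put("type", tokens[4]);
        // prints {ID=1, WKT=POLYGON ((0 0, 1 0, 1 1, 0 0)), date=null, osm_id=42, name=Park, type=leisure}
        System.out.println(fillByName(names, byName));
    }
}
```

In the importer, the name-based variant corresponds to `featureBuilder.set("osm_id", ...)` and so on; the alternative is to keep `add` and supply a placeholder (e.g. `null`) for `date` before `osm_id`.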

Hi Marcin,

The command line tools currently support Accumulo and (to a lesser degree) Kafka. For ingesting data into HBase, you have to use the GeoTools API for creating simple features. We have several tutorials on how to do this using Accumulo; those would be a good place to start. You should be able to adapt them to HBase by replacing the Accumulo data store with an HBase data store. Because both are GeoTools DataStore implementations, almost all of the code would remain the same.

Single process ingest/query:
http://www.geomesa.org/documentation/tutorials/geomesa-quickstart-accumulo.html

Map/reduce ingest:
http://www.geomesa.org/documentation/tutorials/geomesa-examples-gdelt.html

Streaming ingest using Storm:
http://www.geomesa.org/documentation/tutorials/geomesa-quickstart-storm.html

The HBase documentation describes how to get an HBase data store:
http://www.geomesa.org/documentation/user/hbase_datastore.html
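To make the swap concrete: with either back end the store is obtained through GeoTools' `DataStoreFinder.getDataStore(Map)`, and what differs is the parameter map. The plain-Java sketch below builds only the HBase variant, using the `bigtable.table.name` key that the importer in this thread passes; the class name and the table name `geomesa` are placeholders, and whether further keys are required depends on the GeoMesa version, so the HBase data store page is the authority here.

```java
import java.io.Serializable;
import java.util.HashMap;
import java.util.Map;

public class HBaseParamsSketch {

    // Key as used by the importer in this thread (assumed; verify against
    // the GeoMesa HBase data store documentation for your version).
    static final String TABLE_NAME_KEY = "bigtable.table.name";

    // Builds the connection-parameter map that would be handed to
    // org.geotools.data.DataStoreFinder.getDataStore(...) in place of the
    // Accumulo parameters used by the tutorials.
    static Map<String, Serializable> hbaseParams(String tableName) {
        Map<String, Serializable> params = new HashMap<>();
        params.put(TABLE_NAME_KEY, tableName);
        return params;
    }

    public static void main(String[] args) {
        Map<String, Serializable> params = hbaseParams("geomesa");
        System.out.println(params); // prints {bigtable.table.name=geomesa}
        // With GeoTools and the GeoMesa HBase module on the classpath, the
        // tutorial code would then continue from:
        //   DataStore dataStore = DataStoreFinder.getDataStore(params);
    }
}
```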

Thanks,

Emilio

On 07/04/2016 04:34 AM, Marjasiewicz, Marcin wrote:

Line Tools and HBase

Good morning,

I would like to ask a question about using the Command Line Tools with HBase. Is this supported, or is it a tool only for Accumulo?

If not, what is the proper format for storing geometry in HBase? For example, if I import a CSV with geometry as WKT, will it work with the GeoMesa HBase GeoServer plugin?

I have already configured the GeoServer plugin to work with my HBase. Now I am looking for a solution to load geospatial data into it.
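One practical point about WKT in CSV: WKT itself contains commas (between coordinate pairs), so a CSV carrying WKT needs either a different field delimiter (the importer later in this thread splits on `;`) or quoted fields. A minimal plain-Java sketch of the quoted-field option follows, assuming RFC 4180-style double quotes without escaped quotes inside fields; the class name is hypothetical, and turning the extracted text into a geometry would still go through JTS's `WKTReader`.

```java
import java.util.ArrayList;
import java.util.List;

public class QuotedCsvSketch {

    // Splits one CSV line on commas, except commas inside double-quoted fields.
    // Assumption: quotes only delimit fields; escaped quotes ("") are not handled.
    static List<String> splitLine(String line) {
        List<String> fields = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inQuotes = false;
        for (char c : line.toCharArray()) {
            if (c == '"') {
                inQuotes = !inQuotes;            // toggle state, drop the quote itself
            } else if (c == ',' && !inQuotes) {
                fields.add(current.toString());  // field boundary
                current.setLength(0);
            } else {
                current.append(c);
            }
        }
        fields.add(current.toString());          // last field
        return fields;
    }

    public static void main(String[] args) {
        String row = "1,\"POLYGON ((0 0, 1 0, 1 1, 0 0))\",42";
        List<String> fields = splitLine(row);
        System.out.println(fields.size());         // prints 3
        System.out.println(fields.get(1));         // prints POLYGON ((0 0, 1 0, 1 1, 0 0))
        // A naive split cuts the polygon into pieces:
        System.out.println(row.split(",").length); // prints 6
    }
}
```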

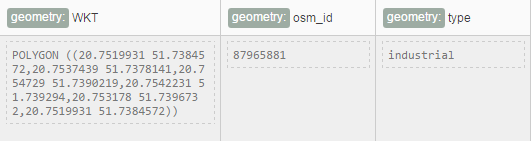

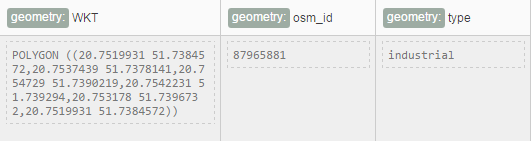

My sample data row in

HBase table looks that:

Best Regards

_______________________________

Marcin Marjasiewicz
