Automated GUI tests as part of the project quality journey

Quality isn't a place, it's a journey – and many of the stops and sights along the way are similar for each team. In this article, I'll introduce some of the landmarks we've seen in the Jubula team as well as in teams where BREDEX consultants are active in helping customers on their own journeys. The focus is on steps teams take when they want to add automated GUI tests to their process.

The search for information

The quality journey can be seen as a search for information. I like the definition of testing that says: "We test to gain information so that we can make decisions" (source). One type of information is whether changes have negatively affected the quality of the software (regression testing). Another is whether new features have been implemented correctly and match what the customer wanted (acceptance testing).

GUI tests as one of many possible journeys

There are many different approaches to, and levels of, testing. One focus area at BREDEX is customer-facing tests: system tests and user acceptance tests at a predominantly functional level. This makes sense because we develop individual customer applications that are accessed via a user interface, and we provide test consulting to customers with similar applications.

We use Jubula for automated testing in many of our projects, which makes sense for us because:

- It gets the whole team thinking – and talking – about quality from a user perspective: non-programmer team members can use it too, and the tests can be used as a communication basis for stakeholders.

- It tests applications just like a real user would. So we can perform automated GUI-level acceptance and system testing and get constant feedback about whether the requirements are correct from a user perspective.

- It fits well into the whole process: it supports continuous integration and integrates with test management (ALM) tools. The keyword-driven approach also encourages well-structured tests that can grow and change along with development.

The automated testing journey begins

Of course, it's important to select your test automation tool(s) to fit your quality aims, your team, your process and your application. But some aspects of test automation have to be considered regardless of your tooling, and this is where the journey begins.

1. Write some tests

OK, so the first step is usually to actually write some tests. I'm not going to go into detail on this except to mention two things:

- Actually physically writing a test is relatively easy. It's important, though, to make sure that the test you've written won't just run now, but will also run later and be easy to update and adapt. This means paying attention to synchronization, naming conventions, and the structure and categorization of keywords, for example.

- Based on the idea that tests should provide information, it's important to put some work into designing Test Cases to correspond to your requirements and use cases. Your tests should provide information you actually need!
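Synchronization, mentioned in the first point, means waiting until the application has reached the expected state instead of pausing for a fixed time. Jubula provides its own actions for this; the following is only a tool-independent Python sketch of the underlying idea: poll a condition until it holds or a timeout expires. The `wait_until` helper and the simulated dialog are hypothetical illustrations, not Jubula API.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 10.0, interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds pass.

    Returns True as soon as the condition holds, False if the timeout expires first.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


# Hypothetical stand-in for a dialog that only appears after some delay.
opened_at = time.monotonic() + 0.3


def dialog_is_visible() -> bool:
    return time.monotonic() >= opened_at


# Instead of a fixed sleep, wait (at most 5 s) for the dialog to appear.
print(wait_until(dialog_is_visible, timeout=5.0))  # True
```

A fixed sleep that is long enough on a fast developer machine may be too short on a busy CI agent; polling with a generous timeout continues as soon as the GUI is ready and only fails when it really isn't.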

2. Run some tests

Once you've got some tests, you'll want to run them as part of an unattended build and test process. If you don't already have continuous integration (CI), this is the time to start thinking about it. Once you have a CI process, it's time to make it shinier by adding continuous testing to it.

It's true that GUI tests take much more time to run than unit or integration tests. Nevertheless, the information they provide is highly important and useful, and it's worth being able to run them automatically. It's unlikely that you'll run them after every commit, but you may want to run many of them on a nightly basis, and perhaps some at the weekend, for example.
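One way to express this trade-off is to group tests into tiers by how often they run. The sketch below is a hypothetical Python illustration: the trigger names and suite names are invented, and each trigger runs its own suites plus those of the faster tiers before it, so GUI suites are left out of the per-commit run.

```python
from enum import Enum
from typing import Dict, List


class Trigger(Enum):
    COMMIT = "commit"
    NIGHTLY = "nightly"
    WEEKEND = "weekend"


# Hypothetical suite names per tier; GUI tests start at the nightly tier.
SUITES_BY_TIER: Dict[Trigger, List[str]] = {
    Trigger.COMMIT: ["unit", "integration"],
    Trigger.NIGHTLY: ["gui_smoke", "gui_regression"],
    Trigger.WEEKEND: ["gui_full_cross_platform"],
}
# Tiers ordered from fastest/most frequent to slowest/least frequent.
TIER_ORDER: List[Trigger] = [Trigger.COMMIT, Trigger.NIGHTLY, Trigger.WEEKEND]


def suites_for(trigger: Trigger) -> List[str]:
    """Return the suites for `trigger`, including all suites of the faster tiers."""
    selected: List[str] = []
    for tier in TIER_ORDER:
        selected.extend(SUITES_BY_TIER[tier])
        if tier is trigger:
            break
    return selected


print(suites_for(Trigger.NIGHTLY))
# ['unit', 'integration', 'gui_smoke', 'gui_regression']
```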

The generic steps for including GUI tests in your CI are:

- Prepare the test environment: reset any data for your backend, ensure that the correct version of the tests will be run (e.g. by getting the test script from version control), and build your AUT (your application under test).

- Prepare your (virtual) test instance: start the machine and connect it to your CI server as a build agent, log in as the test user, clean up any data from previous runs, and deploy and configure your AUT. We highly recommend using dedicated test machines that are as close to your target environment as possible, and deploying the AUT in the same (or a similar) way as your users will. Producing a single deployable artefact benefits your manual testing, your automated testing and your release process.

- Run your tests: with Jubula, this step involves calling the testexec tool to specify which tests should be run with which configuration.

- Finalize: once all tests have run, collect the logs and tear down the environment.
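These steps can be modelled as a simple sequence in which finalization always runs, even when the test run fails, so that logs are still collected and the machine is left clean. This is a hypothetical Python sketch: the `Pipeline` class and its stage methods are invented placeholders that only record that they ran. A real pipeline would be driven by your CI server and would call Jubula's testexec tool for the test run; its options are not shown here.

```python
from typing import List


class Pipeline:
    """Hypothetical GUI-test pipeline; each stage only records that it ran."""

    def __init__(self, fail_tests: bool = False) -> None:
        self.log: List[str] = []
        self.fail_tests = fail_tests  # simulate a failing test run

    def prepare_environment(self) -> None:
        # Reset backend data, fetch the tests from version control, build the AUT.
        self.log.append("prepare_environment")

    def prepare_instance(self) -> None:
        # Start the test machine, log in, clean up old data, deploy and configure the AUT.
        self.log.append("prepare_instance")

    def run_tests(self) -> None:
        # Placeholder for starting the actual test run (e.g. via testexec).
        self.log.append("run_tests")
        if self.fail_tests:
            raise RuntimeError("test run failed")

    def finalize(self) -> None:
        # Collect logs and tear down the environment.
        self.log.append("finalize")

    def execute(self) -> bool:
        """Run all stages in order; finalize always runs. Returns True on success."""
        try:
            self.prepare_environment()
            self.prepare_instance()
            self.run_tests()
            return True
        except RuntimeError:
            return False
        finally:
            self.finalize()


failed_run = Pipeline(fail_tests=True)
print(failed_run.execute())  # False
print(failed_run.log[-1])    # finalize
```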

Preparing the test instance and running the tests can be done on several machines in parallel, for example for cross-platform testing. The individual parts of preparing the test environment can also be done in parallel.
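Running the per-machine steps in parallel could look roughly like the following hypothetical Python sketch, which uses a thread pool from the standard library's `concurrent.futures` to fan out over a list of invented platform names. `prepare_and_run` is a placeholder for preparing one test instance and running the tests on it.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def prepare_and_run(platform: str) -> str:
    """Placeholder for one machine: prepare the test instance, then run the tests."""
    # A real implementation would deploy the AUT and start the test run on `platform`.
    return f"tests run on {platform}"


def run_cross_platform(platforms: List[str]) -> Dict[str, str]:
    """Run prepare_and_run for every platform concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max(1, len(platforms))) as pool:
        return dict(zip(platforms, pool.map(prepare_and_run, platforms)))


# Hypothetical target platforms for cross-platform testing.
print(run_cross_platform(["windows", "linux", "macos"]))
# {'windows': 'tests run on windows', 'linux': 'tests run on linux', 'macos': 'tests run on macos'}
```

The shared environment preparation would happen once before this fan-out, and finalization once after all machines have reported back.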

3. Woo hoo, results! What do we do with them?

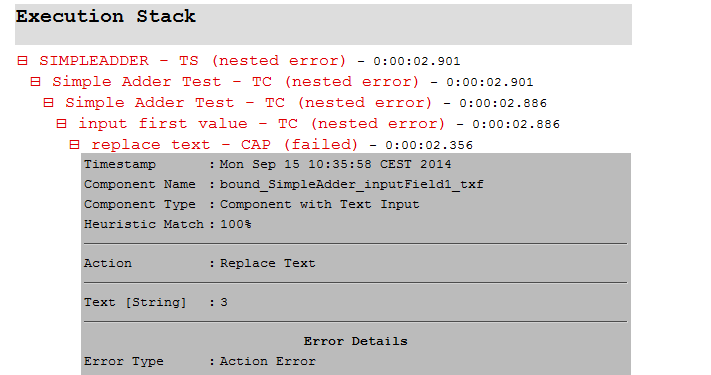

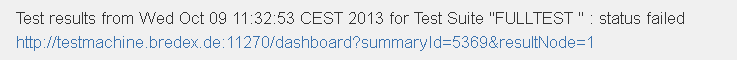

If all has gone to plan, you can view your test results when you come into work in the morning. In Jubula, that means results are available in the database as well as in HTML form. You can see what went wrong, along with screenshots of error situations. If there were problems in the build or test run, or if your test results show errors, it's important to deal with them quickly. After all, collecting information and then not using it is relatively pointless.

Since one of the aims of automated testing is to find errors when they are still easy and cheap to fix, we react to any problems in the tests / test infrastructure with the highest priority. We fix things we've broken before moving on with other tasks.

4. Maximizing information from results

Once you get used to the idea of having test results every day, it feels uncomfortable and weird not having them. Or not having as many of them as you could. If one Test Case fails, then you don't want the rest of the test to fail because of it. This means designing your tests with the aim that each Test Case is executable independently of any other Test Cases. This can mean various things:

- Each Test Case assumes the same defined start state of the GUI, and leaves the GUI in this state, either:

  - at the end of the Test Case, or

  - as part of error handling that deals with the problem or abandons the Test Case.

- Test Cases don't require data that is created by another Test Case.

- Test Cases should focus on testing one scenario in a Use Case, and not try to test too much at once.
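The idea of a shared start state and state-restoring error handling can be illustrated with a small, tool-independent Python sketch. Everything here is hypothetical: `FakeGui` stands in for the application, the three Test Cases are invented, and for brevity the reset to the start state is done by the runner, whereas in the approach described above it belongs to each Test Case's own cleanup and error handling.

```python
from typing import Callable, Dict

START_STATE = "main_window"  # hypothetical defined start state of the GUI


class FakeGui:
    """Minimal stand-in for the application's GUI state."""

    def __init__(self) -> None:
        self.state = START_STATE

    def reset_to_start(self) -> None:
        # Cleanup / error handling: close dialogs and navigate back to the start state.
        self.state = START_STATE


GuiTestCase = Callable[[FakeGui], None]


def check(condition: bool, message: str) -> None:
    """Fail a verification step (raises even when Python runs with -O)."""
    if not condition:
        raise AssertionError(message)


def run_independently(test_cases: Dict[str, GuiTestCase], gui: FakeGui) -> Dict[str, str]:
    """Run each Test Case from the start state and restore that state afterwards."""
    results: Dict[str, str] = {}
    for name, test_case in test_cases.items():
        if gui.state != START_STATE:
            raise RuntimeError(f"{name} would not start from the defined start state")
        try:
            test_case(gui)
            results[name] = "passed"
        except AssertionError:
            results[name] = "failed"
        finally:
            gui.reset_to_start()  # leave the GUI in the start state, pass or fail
    return results


def tc_open_settings(gui: FakeGui) -> None:
    gui.state = "settings_dialog"
    check(gui.state == "settings_dialog", "settings dialog not open")


def tc_unexpected_error(gui: FakeGui) -> None:
    gui.state = "error_dialog"  # the AUT shows an unexpected error...
    check(gui.state == "order_form", "expected the order form")  # ...so this check fails


def tc_open_help(gui: FakeGui) -> None:
    gui.state = "help_window"
    check(gui.state == "help_window", "help window not open")


print(run_independently(
    {"open_settings": tc_open_settings,
     "unexpected_error": tc_unexpected_error,
     "open_help": tc_open_help},
    FakeGui(),
))
# {'open_settings': 'passed', 'unexpected_error': 'failed', 'open_help': 'passed'}
```

Because the failing Test Case still leaves the GUI in the start state, the next Test Case runs normally instead of failing as a knock-on effect.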

5. Sharing information from results with stakeholders

It's good to use the information from the tests to guide current development, but there may also be external stakeholders who are interested in the results. For teams using application lifecycle management (ALM) tools such as HP ALM or Jira, Jubula can automatically add test results to tasks. As of the next version, attributes on tasks can also be adjusted automatically based on test results.

Where are we now, and how does the journey continue?

At this point in the journey, we have the structure to create tests, to run them and to react to and share the information they give. The path ahead can contain routes like "how can we find out what should be tested?", "when do we decide to automate a test?", "what other test levels do we need?", "how do manual and automated tests fit into our strategy?" and "what tests are no longer relevant?".

As I said at the beginning, quality is a journey, and each trek over a mountain may well reveal the next hill to conquer. Fortunately, it is an iterative journey, with benefits to be gained at each stage. Being aware of, and prepared for, these stages from the beginning helps teams focus their efforts and choose the right path more easily.
